In my Robotic Vision course, our team developed a high-speed vision system that tracked and caught baseballs in real time using stereo cameras. The system had to process stereo image pairs, calculate the ball’s 3D trajectory, and command a robotic catcher to intercept the ball, all within a fraction of a second.

Technical Challenge

- The baseball traveled at approximately 55 mph over a 40-foot distance

- Total flight time was only about 500 milliseconds

- The system needed to capture and process 20-30 image pairs, estimate the trajectory, and move the catcher within 200-300 milliseconds

- The robotic catcher had a mechanical delay and maximum travel time of about 150 milliseconds
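To make the timing budget concrete, the arithmetic behind these figures can be checked in a few lines. This is a rough sketch using the approximate numbers listed above. It assumes the 150 milliseconds covers the catcher's delay and travel time combined, and that 30 image pairs are spread evenly over a 300 millisecond window.

```python
# Timing budget derived from the approximate figures listed above.
MPH_TO_FT_PER_S = 5280 / 3600  # feet per second in one mile per hour

speed_ft_per_s = 55 * MPH_TO_FT_PER_S        # ball speed in ft/s
flight_time_ms = 40 / speed_ft_per_s * 1000  # time to cover 40 ft

catcher_ms = 150  # assumption: mechanical delay and travel time combined
vision_budget_ms = flight_time_ms - catcher_ms  # time left for capture and processing

per_pair_ms = 300 / 30  # assumption: 30 pairs spread evenly over 300 ms

print(f"flight time:   {flight_time_ms:.0f} ms")
print(f"vision budget: {vision_budget_ms:.0f} ms")
print(f"per-pair time: {per_pair_ms:.0f} ms")
```

The flight time comes out just under 500 milliseconds. Once the catcher's share is subtracted, capture, processing, and trajectory estimation together have roughly 350 milliseconds, which is why the 200-300 millisecond processing window leaves little slack.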

Implementation

Our solution used a state machine architecture with several key components:

Ball Detection

We first needed a method to detect if the ball had been thrown. For efficiency, we only used the left camera image and cropped it to the region where the ball exits the pitching machine.

First, the initial image was saved as the background reference. For each new image, we calculated the absolute difference from the background, then applied a blur and a binary threshold. If the number of white pixels exceeded our minimum threshold, the system registered a detection and transitioned to tracking. Otherwise, it processed the next image until detection occurred.
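The detection check can be sketched with NumPy alone. This is an illustration, not the project's actual code. The function names, the 3x3 box blur (standing in for whichever blur was used), and the values of `diff_thresh` and `min_white_pixels` are all assumptions chosen for the example.

```python
import numpy as np

def box_blur3(img):
    """3x3 mean filter built from shifted copies (stand-in for a library blur)."""
    padded = np.pad(img.astype(np.float32), 1, mode="edge")
    h, w = img.shape
    total = np.zeros((h, w), dtype=np.float32)
    for dy in range(3):
        for dx in range(3):
            total += padded[dy:dy + h, dx:dx + w]
    return total / 9.0

def ball_detected(frame, background, diff_thresh=30, min_white_pixels=20):
    """Return True when enough pixels differ from the background image.

    frame, background: 2D uint8 grayscale crops of the launch region.
    diff_thresh, min_white_pixels: hypothetical tuning values.
    """
    # Absolute difference, computed in a signed type to avoid uint8 wraparound.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    # Blur suppresses isolated noisy pixels before thresholding.
    blurred = box_blur3(diff)
    # Binary threshold: True marks a "white" (changed) pixel.
    mask = blurred > diff_thresh
    return int(mask.sum()) > min_white_pixels
```

In a loop, the first cropped frame would be kept as `background`, and `ball_detected` would be called on each later frame until it returns `True`.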

Ball Tracking

The tracking system processed stereo image pairs using our calibrated camera parameters to locate the ball’s position in each frame. After some basic image processing (absolute differencing, median blur, and binary thresholding), we used contour detection to find the ball’s outline, applied a minimum enclosing circle fit to approximate its center, and stored the result for each frame.
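As a simplified illustration of the center-finding step, the sketch below takes an already thresholded mask and returns a center and radius. It uses the centroid of the foreground pixels plus the distance to the farthest pixel, which is a plain stand-in for contour detection followed by a minimum enclosing circle fit, not the same algorithm. The function name `locate_ball` is hypothetical.

```python
import numpy as np

def locate_ball(mask):
    """Return (cx, cy, radius) for the foreground pixels of a binary mask.

    Returns None when the mask is empty. Assumes the mask holds a single
    blob; stray foreground pixels would pull the centroid off the ball.
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    cx, cy = xs.mean(), ys.mean()
    # Distance from the centroid to the farthest foreground pixel.
    radius = np.sqrt((xs - cx) ** 2 + (ys - cy) ** 2).max()
    return float(cx), float(cy), float(radius)
```

Running this on the left and right masks of a stereo pair gives the two pixel positions needed for 3D reconstruction.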

Dynamic Region of Interest

To optimize processing speed, we implemented a dynamic region of interest that followed the ball’s movement across frames. After detecting the ball’s center coordinates, our system would automatically recenter the processing window around those coordinates and adjust the window size to accommodate the ball’s increasing visual size as it approached the cameras. This adaptive approach significantly reduced the computational load by processing only the relevant portion of each frame.
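The window update can be sketched as a small pure function. The name `update_roi`, the `scale` factor that ties window size to the ball's apparent radius, and the `min_half` floor are assumptions for illustration, not values from our system.

```python
def update_roi(center, radius, frame_size, scale=3.0, min_half=16):
    """Return (x0, y0, x1, y1) of a square window recentered on the ball.

    center: (cx, cy) ball center in full-frame pixel coordinates.
    radius: apparent ball radius in pixels; the window grows with it.
    frame_size: (width, height) of the full image, used to clamp the window.
    """
    cx, cy = center
    width, height = frame_size
    # Half-width scales with the ball's apparent size, with a lower bound.
    half = max(min_half, int(round(scale * radius)))
    x0 = max(0, int(round(cx)) - half)
    y0 = max(0, int(round(cy)) - half)
    x1 = min(width, int(round(cx)) + half)
    y1 = min(height, int(round(cy)) + half)
    return x0, y0, x1, y1
```

With a NumPy image, the crop would be `frame[y0:y1, x0:x1]`. Any center found inside the crop has to be shifted by `(x0, y0)` to get back to full-frame coordinates before the next update.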

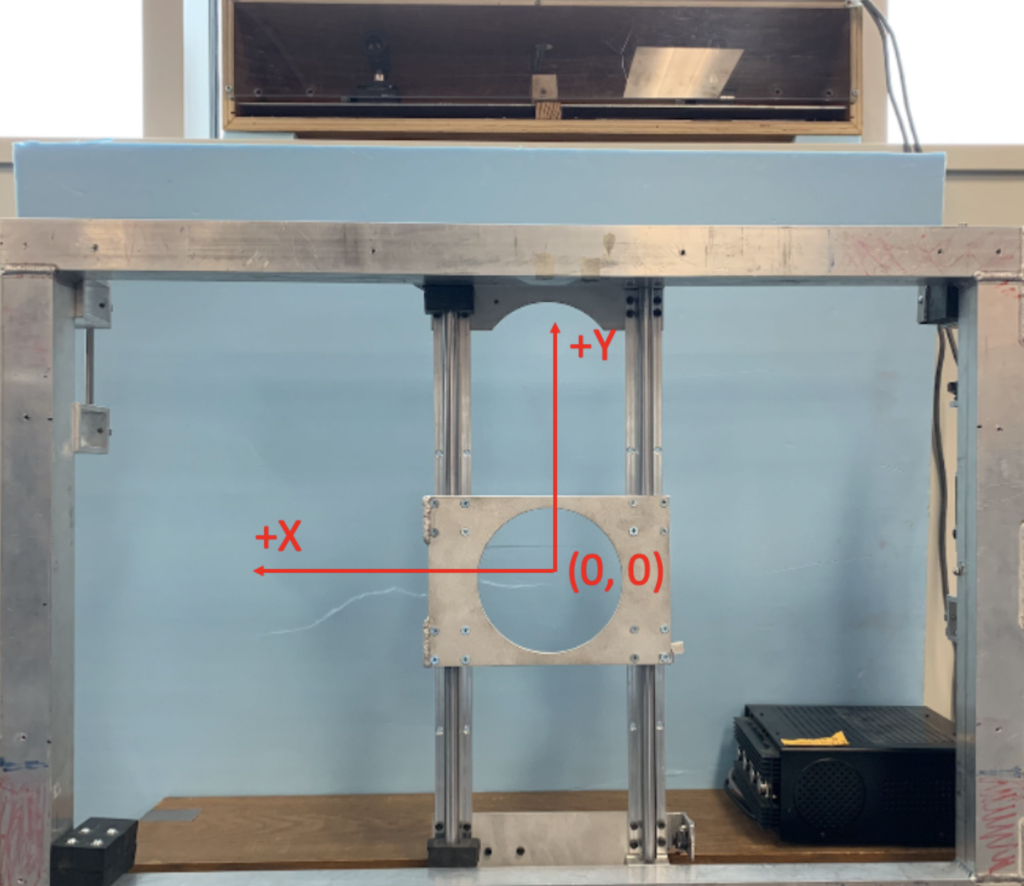

Trajectory Estimation

Using pre-calibrated stereo cameras, we reconstructed the ball’s 3D flight coordinates by comparing its position in both camera frames. Trajectory estimation was triggered twice, after 25 frames and again after 30 frames, so the catcher had enough data to start moving toward the intercept point and then refine its position. Pixel coordinates were transformed into 3D coordinates relative to the cameras and then mapped to the catcher’s frame of reference. Polynomial curves fitted to the ball’s path in the side and top-down views predicted the vertical and horizontal interception points. Safety constraints prevented catcher motion if the intercept point exceeded the catcher’s mechanical range, protecting the hardware from damage.
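The curve-fitting and safety check can be sketched as follows, assuming the 3D points are already expressed in the catcher's frame with `z` as the distance from the catcher plane. The polynomial degrees are assumptions: a line for the top-down view and a quadratic for the side view, since height is quadratic in time and `z` is roughly linear in time. The name `predict_intercept` and the limit parameters are hypothetical.

```python
import numpy as np

def predict_intercept(points, x_limit, y_limit):
    """Predict where the ball crosses the catcher plane (z = 0).

    points: sequence of (x, y, z) ball positions in the catcher's frame.
    x_limit, y_limit: hypothetical reach of the catcher from its origin.
    Returns (x_hit, y_hit), or None if the intercept is out of range.
    """
    pts = np.asarray(points, dtype=float)
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    # Top-down view: horizontal position as a line in z.
    x_hit = np.polyval(np.polyfit(z, x, 1), 0.0)
    # Side view: height as a quadratic in z (gravity bends the path).
    y_hit = np.polyval(np.polyfit(z, y, 2), 0.0)
    # Safety constraint: refuse to command a move outside the reach.
    if abs(x_hit) > x_limit or abs(y_hit) > y_limit:
        return None
    return float(x_hit), float(y_hit)
```

Calling this once with the first 25 points and again with all 30 mirrors the two-stage estimate: the first result starts the catcher moving, and the second refines the target.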

Results

Our system successfully caught 17 out of 20 catchable balls, with the remaining 3 bouncing off the rim. These numbers come from one continuous pitching session, which we ran until 20 potentially catchable balls had been thrown. Reaching that count took a large number of pitches, because many balls left the catchable region.